“Rapidly switching anterior/posterior segment optical coherence tomography.” It may be a mouthful, but it can achieve something very direct and critical: image the structure of the retina and cornea of the human eye.

At Duke University, with the help of Zemax’s OpticStudio™ software, a graduate student and his team have developed the first-ever handheld probe that can image the front and back of the eye in 3D in rapid succession. This should enable effective, sight-saving diagnosis for a broad range of patients, including military personnel, the elderly, and young children.

Derek Nankivil is a fifth-year PhD student studying biomedical engineering at the Duke University School of Engineering. Partnering with the Fitzpatrick Institute for Photonics and working under Professor Joseph Izatt, Derek responded to a call from TATRC, the Telemedicine and Advanced Technology Research Center. This Department of Defense organization aims to speed promising medical innovations to the aid of our servicemen and servicewomen.

We had a chance to explore with Derek how this project evolved, and how he used our software to build the fastest, smallest, and first-ever rapidly switching 3D eye-imaging device.

Zemax: Before we go into specifically how this project came about and what it does, can you help us understand a little about this technology and what you do?

Nankivil: I know there are a lot of acronyms and terms in this field! My specialty is in optical and mechanical design for ophthalmic applications. I’ve focused on optical coherence tomography (OCT), which is a non-invasive imaging method that uses light waves to image the retina in a series of cross-sections to compose a complete 3D image.

The retina is the light-sensitive tissue lining the back of the eye, and OCT takes pictures in “slices” that can map each of the retina’s distinct layers. This is critical for diagnosis and treatment of eye diseases and injuries. I also specialize in scanning laser ophthalmoscopy, or SLO—another imaging modality. This one uses confocal laser scanning microscopy to obtain high-resolution optical images at selected depths. This fast, non-invasive, 2D imaging technique is used to produce images with cellular-level resolution of the living retina.

I’ve primarily studied swept-source OCT, or SSOCT, a type of OCT in which the wavelength of the laser is swept in time. This approach provides higher speeds and a deeper imaging range; the extra range is particularly useful for imaging a larger portion of the eye, or several parts of the eye simultaneously. We can focus on the cellular layer responsible for vision, the photoreceptor layer of the retina.
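
Because the swept source visits one wavelength at a time, the detector records the interference signal as a function of wavenumber k, and a Fourier transform of that spectral fringe gives reflectivity versus depth, one A-scan per sweep. The minimal sketch below is a generic illustration, not the Duke processing pipeline: it simulates a single ideal reflector and recovers its depth with a plain discrete Fourier transform. The sweep range, sample count, and function names are assumptions; real systems also need k-linearization, background subtraction, and dispersion compensation.

```python
import cmath
import math


def simulate_fringe(depth_m, k_start, k_stop, n_samples):
    """Ideal spectral fringe from one reflector at a one-way path difference depth_m (in air).

    The sweep is assumed to be sampled linearly in wavenumber k, which real
    systems achieve with a k-clock or by resampling.
    """
    dk = (k_stop - k_start) / n_samples
    return [math.cos(2.0 * (k_start + n * dk) * depth_m) for n in range(n_samples)]


def a_scan(fringe):
    """Magnitude of the DFT over the positive-depth half: one depth profile (A-scan)."""
    n = len(fringe)
    return [
        abs(sum(fringe[i] * cmath.exp(-2j * math.pi * m * i / n) for i in range(n)))
        for m in range(n // 2)
    ]


def depth_per_bin(k_start, k_stop):
    """Axial sampling interval of the A-scan: pi divided by the total sweep range in k."""
    return math.pi / (k_stop - k_start)


# Hypothetical sweep from 1110 nm down to 1010 nm, recorded in 256 samples.
k1, k2 = 2 * math.pi / 1.11e-6, 2 * math.pi / 1.01e-6
bin_size = depth_per_bin(k1, k2)  # roughly 5.6 micrometres per bin
profile = a_scan(simulate_fringe(40 * bin_size, k1, k2, 256))
peak_bin = max(range(len(profile)), key=profile.__getitem__)
print(peak_bin, peak_bin * bin_size)  # reflector recovered at bin 40
```

In this model each depth bin spans π/Δk, where Δk is the total wavenumber range of the sweep, and the deepest resolvable reflector sits at half the number of samples times that bin size; sampling the sweep more finely therefore extends the imaging range.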

My main focus has been on the development of new types of SLO and SSOCT for handheld applications. These are such important modalities because they're non-invasive, high speed, and high resolution.

Making them handheld for use in a wider range of settings has been a focus of mine. There were handheld probes before, but we built the first one that rapidly sequentially images both the front and back of the eye.

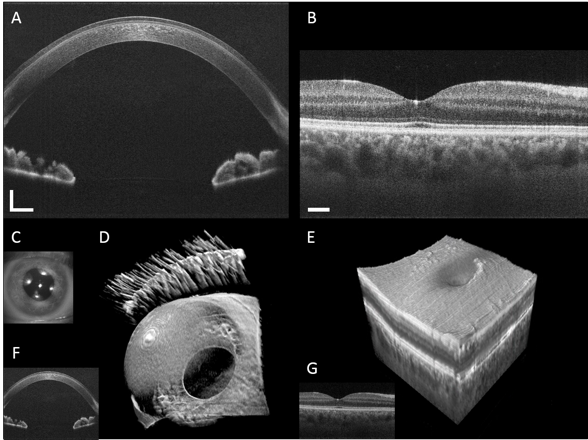

Anterior segment and retinal images from a healthy volunteer.

Zemax: Is OCT used mostly diagnostically, or does it help with research?

Nankivil: OCT is widely used worldwide for diagnostics and to monitor disease progression and response to therapy. And although SLO has shown great promise, it has yet to be adopted widely in the clinic. Both OCT and SLO have been used in vision science as well. Diagnostics often get more attention, but researchers use SLO to examine individual cone cells in the eye to understand how color vision works.

Vision scientists use OCT to better understand the accommodative mechanism. Accommodation is the means by which the eye focuses on objects at different distances. To read up close, you have to accommodate, which involves contraction of a sphincter-like muscle, the ciliary muscle; as it contracts, it releases tension on the fibers that connect to the crystalline lens inside the eye. When we accommodate, those fibers are loosened so the lens can assume a more rounded shape, which increases the optical power of the eye.

In the common condition presbyopia, the lens in older people loses the ability to change shape in this way, and there are still details of that mechanism we don't fully understand.

With 3D quantitative information, we can look at this mechanism and study remedies—corneal procedures, inlays, contact lenses and more. We can determine how well a therapy worked, or why it failed.

Zemax: How did this particular project come about?

Nankivil: The Department of Defense-funded TATRC is always seeking to translate research into new products and breakthroughs that will enhance military health. We received a telemedicine grant from the DOD to develop a field-deployable diagnostic instrument for the eye.

In field hospitals, in places like Afghanistan or Iraq, a soldier may have anything from a minor sand injury caused by a sandstorm to some type of blast injury. If it’s a surface corneal abrasion, doctors know they can treat it with steroid-plus-antibiotic eyedrops for a week and the patient will fully recover. But you'd like to be able to tell whether anything more serious happened, right there in the field.

Trauma can occur to the front or back of the eye. With a blast injury, for example, the impact can cause retinal detachment at the back of the eye. Being able to tell that apart in the field from “this is a corneal laceration, we don't have to fly back to an eye hospital” would be enormously helpful.

The concept was that it had to be easy to operate but also support telemedicine, meaning someone elsewhere could view the imaging session remotely, even live. That would allow for better judgment about when we need to fly someone back to a more formal eye hospital setting for a full workup.

My colleagues and I had already published two research papers in which we used these technologies in handheld devices. The idea was to leverage the experience we gathered developing those instruments. We now wanted to achieve the same results with an even lighter, higher-speed OCT system that could image both the front and back of the eye.

Zemax: And you achieved this goal.

Nankivil: Yes. In our prior work, we were the first ever to image both the front and back of the eye simultaneously. We exploited an interesting property of the laser to do it efficiently, but the system was rather large, far from a handheld form factor.

Our previous research really created stepping stones to being able to achieve this objective, and we were able to leverage that with this project.

In our first design, we demonstrated the first-ever handheld dual SLO/OCT system. We combined a commercial off-the-shelf spectrometer-based OCT system with custom optical and mechanical design to make a probe small enough for handheld operation. It used two scanners, so it was substantially larger than our most recent version. Like early-release video cameras, it didn’t have the most ergonomic design.

Also, the imaging speed was a bit slower than we would have liked. To get a full 3D image, I need to acquire many cross-sectional images, and even though each individual cross-section is obtained very quickly, it can take a few seconds to get a whole volume.

Fortunately, the 2D SLO imaging system is much faster than the rate at which I can acquire an OCT volume, so we showed how we can use the faster SLO to correct for motion artifacts in the 3D volume acquired by OCT.
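
A toy version of SLO-based motion correction: estimate how far a fast 2D frame has shifted relative to a reference frame, then undo that shift for the OCT B-scans acquired at the same moment. The sketch below is a generic rigid-registration illustration, not the algorithm from the team's papers. It searches integer shifts by brute force and scores each by the mean squared difference over the overlapping pixels; real eye-motion correction must also handle sub-pixel, rotational, and within-frame motion.

```python
def estimate_shift(reference, frame, max_shift):
    """Return the integer (dy, dx) that best explains frame[y][x] == reference[y - dy][x - dx].

    Every candidate shift within +/- max_shift pixels is scored by the mean squared
    difference over the region where the two images overlap; the lowest score wins.
    Images are lists of equal-length rows of numbers.
    """
    height, width = len(reference), len(reference[0])
    best_score, best_shift = float("inf"), (0, 0)
    for dy in range(-max_shift, max_shift + 1):
        for dx in range(-max_shift, max_shift + 1):
            total, count = 0.0, 0
            # Only visit pixels where both frame[y][x] and reference[y - dy][x - dx] exist.
            for y in range(max(0, dy), min(height, height + dy)):
                for x in range(max(0, dx), min(width, width + dx)):
                    diff = frame[y][x] - reference[y - dy][x - dx]
                    total += diff * diff
                    count += 1
            score = total / count
            if score < best_score:
                best_score, best_shift = score, (dy, dx)
    return best_shift
```

Once the shift for the SLO frame captured alongside a given OCT B-scan is known, that B-scan can be moved back by the same amount before the volume is assembled. Brute force keeps the example readable; FFT-based cross-correlation is the usual choice when frames are large.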

From there we worked to add color capability to the probe, demonstrating the first true-color handheld SLO system. We discovered that, with a small optical redesign of the front end to correct for the eye's chromatic aberration, along with a change to the light source and collection path, we could provide full-color imaging. Color SLO had been done before, but colors were often rendered inaccurately.

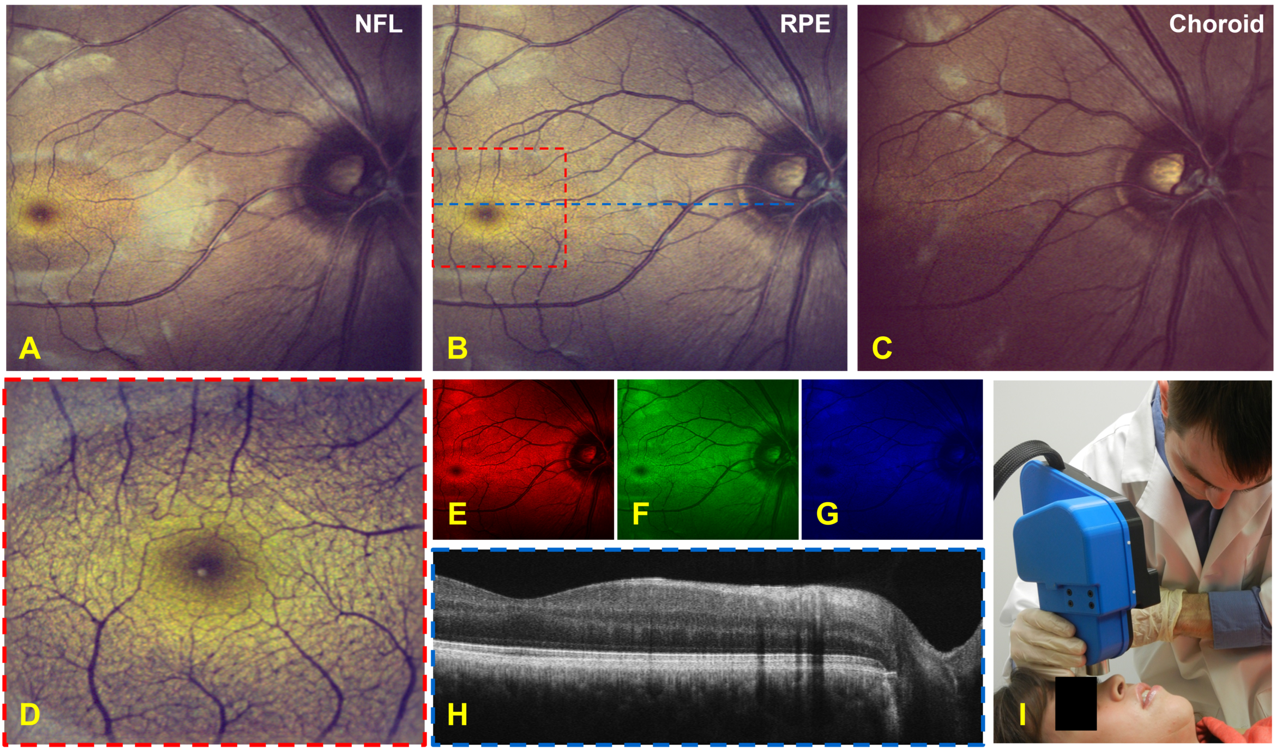

Imaging results from a human volunteer taken with the handheld color SLO and OCT system.

Still, the second probe imaged only the posterior eye, and its ergonomics were not ideal. The most recent probe, our third, was the fastest and smallest of all, and the one where we really innovated to create the dual anterior/posterior capability.

Zemax: How did you approach the challenge of creating a smaller, lighter design that was even more powerful and accurate than your previous iterations?

Nankivil: In the development of any design or system, we start with design specs and determine the performance metrics that we want to satisfy. In our case, we wanted to rapidly, sequentially image both the front and back of the eye with minimal delay between modes. We wanted this delay to be less than one second.

The reason you want to go rapidly sequential rather than simultaneous is to get the brightest images possible. We were the first ever to do it simultaneously, too, but as I said, those probes were bigger and bulkier and the images were dimmer. Because there are limits to the amount of light or power you can safely deliver to the eye, we decided to go sequential so we could make the images brighter.

Next, we wanted to include an iris camera to guide gross alignment with the eye, so that the probe is simple to use even for someone less experienced with this kind of device. With a simple two-dimensional image of the iris from the camera, the user only needs to get the circle of the iris in the viewfinder to align the device properly.

We also wanted the whole thing to be as light as possible. We set an upper limit of 900 grams, about two pounds. Other commercial handhelds have been notably heavier, while imaging only one part of the eye at a time. You’d have to set the device down, unscrew one lens, and screw in another to change modes.

In addition, we wanted a reasonable field of view on the retina and one wide enough to see the entire cornea. And we wanted diffraction-limited performance, which is the best you can do when imaging the retina: the best resolution you can get optically for a given wavelength and aperture.

To do that, we needed to correct for the patient's refractive error. For people who wear glasses, we wanted our optics to supply the same optical power as their corrective lenses, across a range of myopic (nearsighted) and hyperopic (farsighted) conditions.

And we wanted to be able to get close enough to the eye to see that the device is aligned, but far enough away so we’re not banging into eyebrows or eyelashes.

When imaging the front of the eye, there’s a tradeoff between lateral resolution and effective imaging depth. We needed enough depth of focus to image at least the iris and the front of the crystalline lens.

Those were all the things we really wanted—and it was fairly ambitious.
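
The anterior-segment tradeoff between lateral resolution and depth of focus can be seen with the standard Gaussian-beam relations. The depth of focus, taken here as twice the Rayleigh range π·w0²/λ, grows with the square of the focused spot radius w0, while the lateral spot size grows only linearly with w0. The wavelength and spot sizes below are illustrative assumptions, not the probe's design values.

```python
import math


def rayleigh_range(waist_radius_m, wavelength_m, refractive_index=1.0):
    """Distance from the focus over which a Gaussian beam's radius grows by sqrt(2)."""
    return math.pi * waist_radius_m ** 2 * refractive_index / wavelength_m


def depth_of_focus(waist_radius_m, wavelength_m, refractive_index=1.0):
    """Confocal parameter: the full axial range (2 x Rayleigh range) where the beam stays tight."""
    return 2.0 * rayleigh_range(waist_radius_m, wavelength_m, refractive_index)


wavelength = 1.06e-6                 # assumed source centre wavelength, metres
for waist in (10e-6, 20e-6, 40e-6):  # assumed 1/e^2 spot radii at focus, metres
    dof_mm = depth_of_focus(waist, wavelength) * 1e3
    print(f"spot diameter {2 * waist * 1e6:.0f} um -> depth of focus {dof_mm:.2f} mm")
```

Doubling the spot size halves the lateral resolution but quadruples the depth of focus, which is why a scan that must stay in focus over several millimetres of the anterior segment has to accept a larger spot than one aimed at a single thin layer.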

Zemax: So how did you make it all happen?

Nankivil: It starts with the engine. Again, I had some experience from the projects I mentioned previously. The fastest OCT imaging speeds demonstrated to date have been achieved using swept-source lasers. I had been working with a swept-source OCT system, built around a 100 kHz swept-source laser, that was about five times faster than most commercial systems. Now we would use that engine to build a smaller, lighter, rapid-switching model.
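
A rough way to see why A-scan rate matters for handheld volumes: acquisition time is the total number of A-scans divided by the A-scan rate, and further divided by the fraction of each scan cycle actually spent acquiring. The raster size and duty cycle below are hypothetical; only the 100 kHz rate and the roughly five-fold speed ratio come from the interview.

```python
def volume_time_s(a_scan_rate_hz, a_scans_per_b_scan, b_scans_per_volume, duty_cycle=1.0):
    """Seconds to acquire one OCT volume.

    duty_cycle is the (assumed) fraction of scan time spent acquiring; scanner
    flyback and turnaround reduce it on real hardware.
    """
    total_a_scans = a_scans_per_b_scan * b_scans_per_volume
    return total_a_scans / (a_scan_rate_hz * duty_cycle)


# Hypothetical 500 x 500 raster.
fast = volume_time_s(100e3, 500, 500)      # 100 kHz swept source
slow = volume_time_s(100e3 / 5, 500, 500)  # a system about five times slower
print(f"{fast:.1f} s vs {slow:.1f} s per volume")  # 2.5 s vs 12.5 s per volume
```

Every second spent on a volume is a second in which the patient's eye or the operator's hand can move, which is also why the faster SLO frames are useful for motion correction.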

A company called Mirrorcle Technologies had come out with tiny two-axis scanning MEMS (microelectromechanical systems) mirrors. These dual-axis micromirrors use actuators to tilt the mirror about two axes, a different mechanism from the standard galvanometer scanners we used before. These tiny scanners are lightweight, allowing us to replace something that weighed hundreds of grams with something that weighed tens of grams.

With the laser selected and the scanner selected, we moved to the optical design. That’s where Zemax came in.

Zemax: How did the OpticStudio™ software help you here?

Nankivil: As I said, thanks to the previous project, we already had some experience with the posterior segment optical design for imaging the back of the eye. We employed a well-known technique called lens splitting, which divides the refractive power of a single strong lens among several weaker lenses to achieve better optical performance. So rather than have one high-power lens, you can have two lower-power lenses making up one compound lens.
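
The power bookkeeping behind lens splitting follows from the thin-lens combination formula P = P1 + P2 - d·P1·P2, for two thin lenses of powers P1 and P2 separated by d. The sketch below assumes ideal thin plano-convex lenses and an illustrative glass index of 1.5. It shows that two half-power lenses in contact reproduce the original power while each needs a curved face with twice the radius; spreading the bending over more, gentler surfaces is what reduces aberrations such as spherical aberration. The numbers are illustrative, not the probe's prescription.

```python
def combined_power(p1, p2, separation_m):
    """Power (diopters) of two thin lenses of powers p1, p2 (diopters) separated by separation_m."""
    return p1 + p2 - separation_m * p1 * p2


def plano_convex_radius(power_d, n=1.5):
    """Radius (metres) of the curved face of a thin plano-convex lens, from P = (n - 1) / R."""
    return (n - 1.0) / power_d


single = 20.0                                   # one 20 D (50 mm focal length) lens
split = combined_power(single / 2, single / 2, 0.0)
print(split)                                    # 20.0: same total power with the halves in contact
print(plano_convex_radius(single), plano_convex_radius(single / 2))  # 0.025 m vs 0.05 m
print(combined_power(10.0, 10.0, 0.005))        # a 5 mm gap lowers the total power slightly
```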

We used OpticStudio™ to design this lens and to keep it small, while also constraining the cost. Using the software, I could keep adding elements to improve performance, and analyze the tradeoff between design complexity and performance.

With OpticStudio’s merit function, we could experiment with both custom and off-the-shelf lenses, analyzing how the lenses we might want to make compare with commercial lens systems. In our case, for the posterior segment design, we found we could use commercial lenses and satisfy our performance parameters. Everyone’s eyes are different, but we developed our own eye model using data from the literature to simulate how an average human eye behaves. We added a motorized adjustment to control the space between lens pairs, to correct for patient refraction. This way, patients do not need to wear their glasses while being imaged.

To do this, we created eye models to simulate the range of refractive error that is common in the population and then optimized our design across the entire set of model eyes.
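
One way to get a variable refractive correction from a fixed pair of lenses is to change their separation. In a thin-lens model of a two-lens relay that is afocal at its nominal spacing, lengthening or shortening the gap by δ makes the output beam converge or diverge. The sketch below assumes ideal thin lenses and a hypothetical 30 mm focal length for the lens nearest the eye, and solves for the δ that produces a requested output vergence. It ignores vertex distance and the real lens prescription, so treat it as an illustration of the principle rather than the probe's calibration.

```python
def output_vergence(delta_m, f2_m):
    """Vergence (diopters) leaving lens 2 when the afocal spacing f1 + f2 changes by delta_m.

    Lens 1 focuses collimated light f1 behind it, so the intermediate focus sits
    f2 + delta_m in front of lens 2; the thin-lens equation gives the output vergence.
    The result does not depend on f1.
    """
    return delta_m / (f2_m * (f2_m + delta_m))


def spacing_change_for(vergence_d, f2_m):
    """Inverse of output_vergence: the spacing change (metres) that yields vergence_d diopters."""
    return vergence_d * f2_m ** 2 / (1.0 - vergence_d * f2_m)


f2 = 0.030  # hypothetical focal length (m) of the lens nearest the eye
for refraction in (-8.0, -4.0, 0.0, 4.0):  # example spectacle corrections, diopters
    delta = spacing_change_for(refraction, f2)
    print(f"{refraction:+.0f} D -> change lens spacing by {delta * 1e3:+.2f} mm")
```

A myopic eye needs diverging light, so the lenses move closer together (negative δ); a hyperopic eye needs converging light, so they move apart.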

Next came the anterior segment design. This is where we had to get really innovative. We wanted telecentric scanning across the front of the eye. When we image the back of the eye, by contrast, we scan a beam across the retina by delivering a beam that pivots about the pupil. The parallel rays enter the eye and are focused onto the retina, and as the angle of the mirror is scanned, the spot moves across the retina.

Sharing the same lenses, our system transforms this collimated beam into a focused beam. Instead of pivoting about the pupil, the anterior scan beam comes to a focus at the pupil. As the angle of the scanning mirror changes, the beam translates across the front of the eye, staying parallel to the optical axis and focused in a single plane the whole time. That’s “object-side telecentric scanning.”
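
The difference between the two scan geometries can be checked with paraxial ray-transfer (ABCD) matrices. The layouts below are idealized textbook arrangements with hypothetical focal lengths, not the probe's actual prescription: a chief ray leaves the scanning mirror on axis at angle θ. Through a 4f relay it crosses the axis again at the pupil plane, the pivot used for retinal imaging. Through a single lens placed one focal length from the mirror it exits parallel to the axis at height f·θ, which is object-side telecentric scanning.

```python
def propagate(distance):
    """Ray-transfer matrix for free space of the given length."""
    return ((1.0, distance), (0.0, 1.0))


def thin_lens(focal_length):
    """Ray-transfer matrix for an ideal thin lens."""
    return ((1.0, 0.0), (-1.0 / focal_length, 1.0))


def trace(ray, elements):
    """Apply each matrix in order to a (height, angle) ray; paraxial, angles in radians."""
    height, angle = ray
    for (a, b), (c, d) in elements:
        height, angle = a * height + b * angle, c * height + d * angle
    return height, angle


f1, f2 = 0.050, 0.025  # hypothetical relay focal lengths, metres
mirror_angle = 0.05    # chief-ray angle leaving the scanner, radians

# Posterior mode (idealized): a 4f relay images the mirror onto the pupil, so the beam pivots there.
posterior = [propagate(f1), thin_lens(f1), propagate(f1 + f2), thin_lens(f2), propagate(f2)]
print(trace((0.0, mirror_angle), posterior))  # height ~0 at the pupil, angle scaled by -f1/f2

# Anterior mode (idealized): mirror one focal length before a lens, so the exit ray is parallel to the axis.
anterior = [propagate(f1), thin_lens(f1)]
print(trace((0.0, mirror_angle), anterior))   # height f1 * angle, angle ~0
```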

Then we added a fold mirror system. This mechanism rapidly flips the fold mirror out of the beam path, letting us switch very quickly to imaging the back of the eye, all in a very small package. We included the fold mirror's geometric constraints in the merit function of our optical design.

This was the most thoroughly explored aspect from a design perspective. We tried a single doublet, an air-spaced doublet, a triplet, an air-spaced triplet, and the pair of achromats you see now. We also tried the achromatic pair with a field flattener. Each step along that list gave us more performance, but we decided that the field flattener's improvement wasn't worth the added complexity.

An achromatic doublet combines two types of glass with different dispersion in a single lens to reduce chromatic aberration. This improves the optical performance of a system while keeping the form factor small. This ended up being the best tradeoff between performance and cost.
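
The thin-lens achromat condition shows how two glasses cancel color error: the element powers must satisfy P1 + P2 = P and P1/V1 + P2/V2 = 0, where V is each glass's Abbe number. The sketch solves these equations for a hypothetical 100 mm doublet using approximate catalog Abbe numbers for a crown (N-BK7, about 64.2) and a flint (F2, about 36.4). It illustrates the principle only and does not describe the commercial doublets used in the probe.

```python
def achromat_powers(total_power_d, abbe_crown, abbe_flint):
    """Split a thin doublet's power so primary (longitudinal) color cancels.

    Solves P1 + P2 = P and P1 / V1 + P2 / V2 = 0 for the crown (P1) and flint (P2) powers.
    """
    p_crown = total_power_d * abbe_crown / (abbe_crown - abbe_flint)
    p_flint = -total_power_d * abbe_flint / (abbe_crown - abbe_flint)
    return p_crown, p_flint


# Hypothetical 100 mm (10 D) doublet; Abbe numbers are approximate catalog values.
p1, p2 = achromat_powers(10.0, 64.17, 36.37)
print(f"crown {p1:+.2f} D, flint {p2:+.2f} D")  # strong positive crown, weaker negative flint
```

The crown element carries more than the total power and the flint subtracts the excess; because the two glasses disperse differently, their color errors cancel while a net positive power remains.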

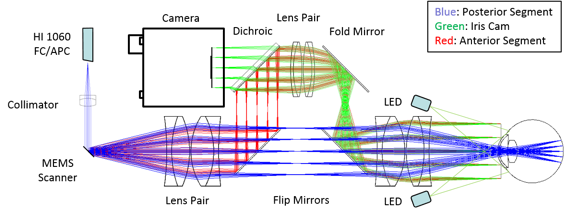

Handheld probe optical design: blue, red, and green rays depict the posterior segment, anterior segment and iris camera collection paths, respectively.

Zemax: So the software helped keep your costs down?

Nankivil: Absolutely. With anything that’s custom-designed, we have to consider manufacturability. I can design multiple solutions and figure out that sweet spot between cost/complexity and performance. We can decide when to push for higher performance or tighter tolerances—and pay a higher price—and when it’s satisfactory at a lower tolerance.

I designed more complex solutions to this problem than the one we went with. I designed six systems in all, but after taking both tolerancing and cost into account, we went with the fifth of the six in order of increasing complexity.

Glass properties, thickness, and curvature all have uncertainties due to the manufacturing process. We modeled the positional uncertainty of all the elements that might result from the combination of optical and mechanical manufacturing tolerances. OpticStudio’s tolerancing features allowed us to make sure the design was robust enough given those tolerances.
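
Tolerancing tools commonly estimate yield with Monte Carlo runs: perturb every toleranced parameter at random, re-evaluate performance, and count how many simulated builds stay within spec. The sketch below does this for a deliberately simple stand-in, the total power of two thin lenses (P = P1 + P2 - d·P1·P2), with hypothetical Gaussian errors on each focal length and the spacing. Real tolerancing evaluates ray-traced performance criteria across many more parameters, so this shows only the shape of the idea.

```python
import random


def system_power(f1_m, f2_m, spacing_m):
    """Total power (diopters) of two thin lenses with focal lengths f1_m and f2_m."""
    p1, p2 = 1.0 / f1_m, 1.0 / f2_m
    return p1 + p2 - spacing_m * p1 * p2


def monte_carlo_yield(nominal, sigmas, spec_d, trials=5000, seed=1):
    """Fraction of simulated builds whose power is within +/- spec_d diopters of nominal.

    nominal and sigmas are (f1, f2, spacing) tuples in metres; each trial draws every
    parameter independently from a normal distribution around its nominal value.
    """
    rng = random.Random(seed)
    target = system_power(*nominal)
    passed = 0
    for _ in range(trials):
        sample = [rng.gauss(mu, sigma) for mu, sigma in zip(nominal, sigmas)]
        if abs(system_power(*sample) - target) <= spec_d:
            passed += 1
    return passed / trials


nominal = (0.100, 0.050, 0.020)      # hypothetical focal lengths and spacing, metres
loose = (0.002, 0.001, 0.0005)       # hypothetical 1-sigma manufacturing errors
tight = tuple(s / 2 for s in loose)  # halve every tolerance (and pay for it)
print(monte_carlo_yield(nominal, loose, spec_d=0.25), monte_carlo_yield(nominal, tight, spec_d=0.25))
```

Comparing the two yields is a miniature version of the cost-versus-performance question Nankivil describes: tighter tolerances raise yield but cost more to manufacture.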

Zemax: You also used OpticStudio™ to develop the iris camera in the probe.

Nankivil: Yes. This part was new for me, and maybe overkill for the project, but it was a great learning experience. For the iris camera we wanted illumination in the plane of the iris to be as uniform as we could make it. So we used OpticStudio’s non-sequential mode to perform what’s called a Monte Carlo simulation.

This built-in feature lets you model a light source and use random sampling of rays to estimate how the light is distributed. I used it as an optimization tool to maximize uniformity in the object plane, optimizing the angles of the LEDs and mounting them accordingly to get the most uniform illumination.

I optimized each of these systems in about one hour. I experimented with two vs. four vs. eight LEDs, and found that four gave us enough spatial uniformity across the iris when directed at the optimum angle.
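
A stripped-down version of the LED-aiming study: place N LEDs on a ring above the iris plane, tilt each inward by the same angle, sum their irradiance over a circular field, and keep the tilt that maximizes min/max uniformity. This sketch swaps OpticStudio's non-sequential Monte Carlo ray trace for a direct irradiance sum from ideal Lambertian point sources, and all geometry (ring radius, working distance, field size) is hypothetical, so it is not expected to reproduce the team's results.

```python
import math


def irradiance(point, led_pos, led_dir, m=1.0):
    """Relative irradiance at a point on the z = 0 plane from one point LED.

    Intensity falls as cos^m of the angle from the LED axis (m = 1 is Lambertian);
    received power also falls with 1/r^2 and with the cosine of incidence on the plane.
    """
    vx, vy, vz = (p - s for p, s in zip(point, led_pos))
    r = math.sqrt(vx * vx + vy * vy + vz * vz)
    cos_emit = (vx * led_dir[0] + vy * led_dir[1] + vz * led_dir[2]) / r
    if cos_emit <= 0.0:
        return 0.0
    cos_incidence = -vz / r  # LEDs sit above the plane, so vz < 0
    return cos_emit ** m * cos_incidence / (r * r)


def ring_of_leds(n, ring_radius, height, tilt_rad):
    """n LEDs evenly spaced on a ring at the given height, each tilted inward by tilt_rad."""
    leds = []
    for i in range(n):
        phi = 2.0 * math.pi * i / n
        pos = (ring_radius * math.cos(phi), ring_radius * math.sin(phi), height)
        axis = (-math.sin(tilt_rad) * math.cos(phi),
                -math.sin(tilt_rad) * math.sin(phi),
                -math.cos(tilt_rad))
        leds.append((pos, axis))
    return leds


def uniformity(leds, field_radius, samples=21, m=1.0):
    """min/max of the summed irradiance over a square grid clipped to a circular field."""
    values = []
    for i in range(samples):
        for j in range(samples):
            x = -field_radius + 2.0 * field_radius * i / (samples - 1)
            y = -field_radius + 2.0 * field_radius * j / (samples - 1)
            if x * x + y * y <= field_radius ** 2:
                values.append(sum(irradiance((x, y, 0.0), p, a, m) for p, a in leds))
    return min(values) / max(values)


def best_tilt(n, ring_radius, height, field_radius):
    """Brute-force search over whole-degree tilts from 0 to 60; returns (uniformity, tilt_rad)."""
    return max(
        (uniformity(ring_of_leds(n, ring_radius, height, math.radians(deg)), field_radius),
         math.radians(deg))
        for deg in range(0, 61)
    )


# Hypothetical geometry in millimetres: LED ring radius, working distance, iris field radius.
for count in (2, 4, 8):
    score, tilt = best_tilt(count, ring_radius=15.0, height=30.0, field_radius=6.0)
    print(f"{count} LEDs: best tilt {math.degrees(tilt):.0f} deg, min/max uniformity {score:.3f}")
```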

Our current model contains a simple commercial camera: the smallest one available on the market with a sensor large enough to obtain the required field of view, given the relay optics I designed.

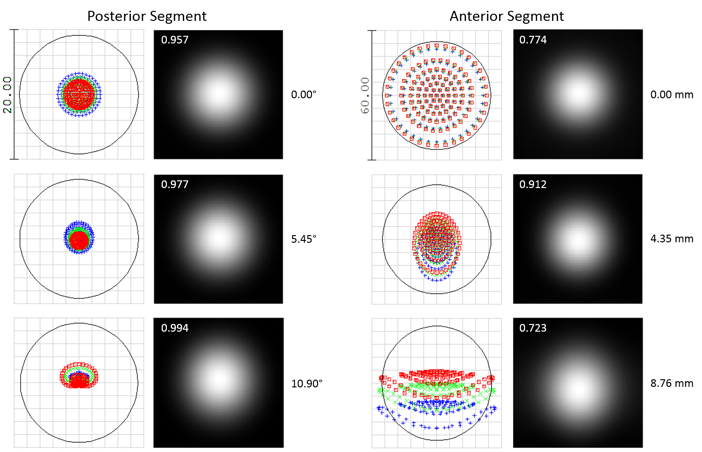

OpticStudio™ produced nice spot diagrams and point spread functions in the image plane, which allowed us to simulate the optical performance of the device. In this figure, you can see that the performance is at or near the diffraction limit across the entire field of view.

Spot diagrams and Huygens point spread functions (PSFs) for the posterior (left) and anterior segment (right) SSOCT illumination.

So we really took advantage of a wide range of OpticStudio’s features when doing the optical design.
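
For reference, the diffraction limit drawn on spot diagrams is usually the Airy disk: for a uniformly filled circular aperture, the radius to the first dark ring is 1.22·λ·f/D (equivalently 0.61·λ/NA in the paraxial limit). A common rule of thumb calls a design diffraction-limited when its RMS geometric spot fits inside that radius. The eye focal length, beam diameters, and wavelength below are rough illustrative assumptions, not values from the probe, and real OCT beams are Gaussian rather than uniformly filled, which changes the constant somewhat.

```python
def airy_radius_m(wavelength_m, focal_length_m, aperture_diameter_m):
    """Radius of the first dark ring of the Airy pattern (uniformly filled circular aperture)."""
    return 1.22 * wavelength_m * focal_length_m / aperture_diameter_m


def is_diffraction_limited(rms_spot_radius_m, wavelength_m, focal_length_m, aperture_diameter_m):
    """Rule-of-thumb check: RMS geometric spot radius smaller than the Airy radius."""
    return rms_spot_radius_m < airy_radius_m(wavelength_m, focal_length_m, aperture_diameter_m)


eye_focal_length = 0.017  # assumed reduced-eye focal length, metres (in-air equivalent)
wavelength = 1.06e-6      # assumed source wavelength, metres
for beam_diameter in (1.0e-3, 2.0e-3, 3.0e-3):  # assumed beam diameters at the pupil, metres
    r = airy_radius_m(wavelength, eye_focal_length, beam_diameter)
    print(f"{beam_diameter * 1e3:.0f} mm beam -> Airy radius {r * 1e6:.1f} um on the retina")
```

A wider beam at the pupil shrinks the diffraction-limited spot, but only if the optics (and the eye's own aberrations) let the geometric spot shrink with it.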

Zemax: After all that optical design, then you had to do mechanical design.

Nankivil: Yes, and OpticStudio™ plays very nicely with SolidWorks, the mechanical design software we used. All the custom components were designed there. Finally, an enclosure designed with ergonomics in mind is 3D printed.

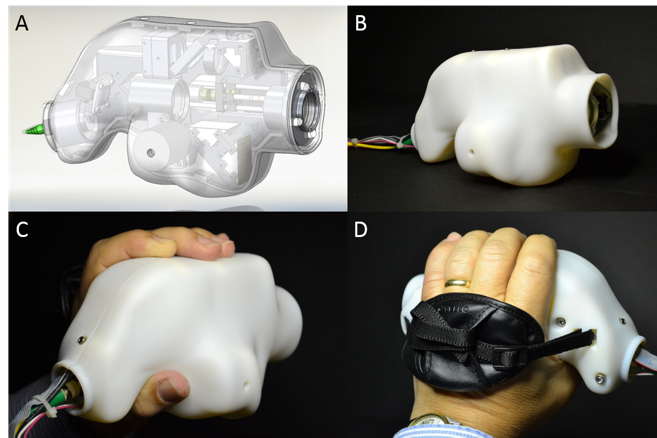

Handheld SSOCT probe internals as well as in its enclosure during handheld operation.

Zemax: What’s happening with the probe now?

Nankivil: Now we’re assessing clinical applicability. We did preliminary imaging in healthy volunteers to demonstrate that it can work. That's where our third research paper leaves off. But the probe has remained in use since then and is now ready for testing in the emergency department at Duke University Hospital, as part of a bigger study to see if it can be used to assess damage to the optic nerve head.

The Duke emergency department sees a few patients with eye issues every day. In the ER, the majority of these cases are either conjunctivitis (pink eye) or trauma. Now, those patients can be imaged with this probe. There’s a special eye room in the emergency department. We plan to image the front and back of the eye in 50 subjects.

The probe is not yet out in field hospitals with military personnel, but we’ve taken a significant step toward that goal. It usually takes many iterations to get a final product into use. We’re still in the first trial phase.

One cool thing is we’ve added a cell phone to the back of the probe, so an operator can control the probe through the cell phone in real time and get 3D renderings. They (or a remote collaborator) can click on the image and rotate it in 3D, and scroll through a stack of images—all while more images are acquired in real time.

The probe is small and light, but it does have an umbilical cord—it can go about six feet from a cart that has a computer and engine. It’s remotely operable with a web-based user interface—someone can log in and remotely see what's happening or even control the device. So a doctor in the U.S. could theoretically be viewing a patient’s eye in Afghanistan and say “move ten degrees, I need to get a good look…”

The handheld paradigm also opens up potential in clinical situations with young children, the elderly, or in pre-op. If, for any reason, a patient is not able to sit up comfortably or use a chin rest, the probe allows the patient to be imaged lying down. This will be really convenient in surgical situations, or with babies.

We have demonstrated all these capacities, and it’s incredibly exciting to look toward the long-term goal of improving diagnostics and vision outcomes.

Acknowledgments

This research was supported by the U.S. Army Medical Research and Materiel Command (USAMRMC Award # W81XWH-12-1-0397) and the Fitzpatrick Foundation Scholar Award (DN).

Some of the research referenced and the images shown in the above article were originally published in these papers:

D Nankivil, G Waterman, F LaRocca, B Keller, AN Kuo, JA Izatt. Biomedical Optics Express 6 (11), 4516-4528.

F LaRocca, D Nankivil, S Farsiu, JA Izatt. Biomedical Optics Express 5 (9), 3204-3216.

F LaRocca, D Nankivil, S Farsiu, JA Izatt. Biomedical Optics Express 4 (11), 2307-2321.